We don't need to fix trust. We need to replace it with informed awareness.

The outdated systems of trust have broken democracy, not AI

Australia’s back in election mode, which means the circus is back in town. Albo’s brandishing his Medicare card like it’s Frodo’s ring. Voldemort Dutton’s pitching Chernobyl nuclear energy. Clive Palmer’s mumbling some shit about gendered bathroom manifestos. And Adam Bandt’s yelling about housing while voting against it.

But now there is this underworld of AI-generated spin, deepfakes, and synthetic media flooding every feed. Like my AI-generated image above. It’s not real, but it feels true.

The humble voter’s job isn’t just picking a leader; it’s figuring out what’s even real.

Reality is a shared hallucination

You pause on a video. A voice that sounds like Joe Biden tells Democrats not to vote. Or you see a photo of Trump surrounded by smiling Black voters. Looks legit. Hits the vibe.

Did you stop and verify it? Of course not. Who has the time between the commutes to work, the school drop-offs, the relentless search for the cheapest grocery price, the dinners to cook, the whole life you want to live? You can’t be expected to investigate everything that pops up on your screen.

So instead of “Is this true?” you go with “Does this feel right to me?”

And with that, I would like to cordially welcome you to the age of ‘reality apathy’.

Welcome to the club

‘Reality apathy’ is a term coined by technologist Aviv Ovadya, and it’s what happens when people are so flooded with conspiracies, deepfakes, and manipulated content that they just give up.

They stop checking. They stop caring. And they retreat into their own bubbles.

And when everyone’s living in a custom feed built around their own emotions and biases, does ‘the truth’ even mean anything anymore?

This is where trust used to come in. You’d trust journalists, experts, and institutions.

Even if you disagreed, there was a shared baseline.

But that floor’s caved in.

The 2024 Edelman Trust Barometer says no major Australian institution clears 60% trust. Media sits at 38%. Government’s dropped to 45%. And there’s a 23-point gap between the elite “informed public” and the rest of us.

It’s broken.

But maybe that’s the wrong problem to solve.

What if trust isn’t something to fix, but something to outgrow?

If we think about it, our current idea of trust is based on gatekeeping.

We trusted because we had no choice. There was no access, no tools, no time, and so we outsourced belief to broadcasters, editors, and politicians.

But now the information floodgates are open. The gatekeepers are struggling to hold a line in a content universe that they weren’t built for.

Meanwhile, AI has entered the chat and is capable of creating, amplifying, and distributing content faster than humans can see it, process it, and understand it.

So I don’t think the solution is to cling to the old idea of trust. To be honest, it’s a fragile faith in authority figures we no longer believe in. We need to let it go. Breathe in. Breathe it out. They are dead to us.

We need to take this opportunity to build something better.

We need something that works in the age of AI, emotional manipulation, and algorithmic persuasion.

Call it Active Verification. Call it Media Hygiene. Call it Collective Sense-Making.

Whatever the label, it needs to be:

Distributed: Everyone participates, not just journalists or watchdogs.

Transparent: We see how claims are made, sourced, and verified, in real time.

Teachable: Kids in Finland learn media literacy from kindergarten. Why isn’t it in the Australian curriculum?

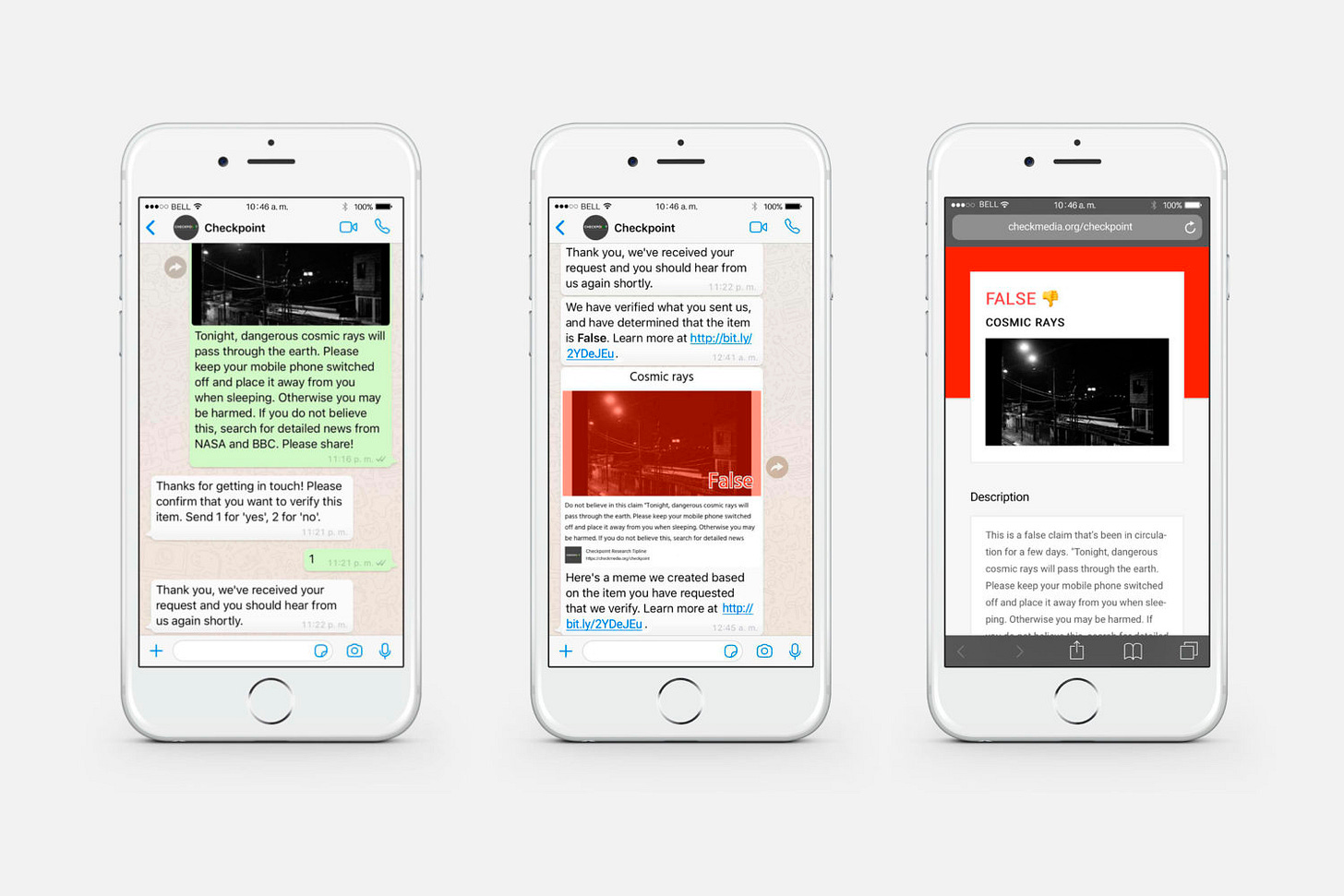

Augmented by AI: If AI can flood us with misinformation, it can also help filter it. Detect deepfakes. Flag inconsistencies. Provide provenance.

I like to consider myself a techno-optimist, which is why I spend so much time trying to understand how digital technology shapes human behaviour. I want us to work with it, not be worked by it. So naturally, I don’t think AI is inherently dangerous. But I do think it’s really fast, and right now it’s moving faster than our ability to verify what it produces.

This is why I think we need systems that aren’t about trust, but are about process.

It’s less about trying to get to this place of “I trust this headline” and more about getting to a place of “I understand how this information was built.”

Trust goes and sits at the back, and informed awareness comes to the fore.

There’s hope, but it needs scale

In Taiwan, fact-checkers use AI tools to verify viral claims before they spread.

In the UK, Full Fact uses automation to review over 50,000 political claims per day.

In Finland, media literacy is as essential as maths or reading. Finland consistently tops the European Media Literacy Index, and it has topped the global happiness rankings eight years running. Maybe not a coincidence.

Australia is trying. Slowly.

The AEC has a fact-checker. It’s under-resourced, but functional.

RMIT FactLab is training a new generation of verification-literate journalists (Meta suspended FactLab from its fact-checking program during the Voice referendum, by the way).

The eSafety Commissioner is putting deepfake education on the radar.

ABC’s Behind the News is giving kids critical-thinking tools through memes, music, and stories that feel relevant to them.

It’s patchy, though, and unfortunately we don’t have a national framework or taskforce. That means we have neither the speed nor the funding. Until we do, we’ll keep playing catch-up with archaic systems that were never designed for AI-era complexity.

The future of democracy isn’t built on trust. It’s built on proof-of-process.

Elections are supposed to be a contest of ideas. Let’s not forget that. We need competing visions of the future so that citizens can decide what shared reality they want to live in.

But if AI fragments that reality, giving each voter their own emotional version of the truth, how do we choose anything collectively?

AI is just a system, and we need to build systems that let us live alongside it without being duped by it.

Democracy won’t die in a deepfake. It’ll die when we keep asking people to ‘just trust’ systems they have told us, time and time again, they no longer believe in, while failing to build the tools that help them navigate this era with clarity.

Trust is no longer enough. We need something better.